Kubernetes Operators in Depth

Link to the original (post date: 2020/09/25)

Summary: Operators have been a vital part of the Kubernetes ecosystem for years. Moving the administrative surface into the Kubernetes API facilitates a "single pane of glass" experience. For developers looking to simplify their Kubernetes-native applications, or DevOps practitioners looking to reduce the complexity of existing systems, Operators can be an attractive proposition. But how do you create an Operator from scratch?

Operators in Depth

Operators are everywhere today. Databases, cloud-native projects, and anything else that is complex to deploy and maintain on Kubernetes now tends to come with one. First introduced by CoreOS in 2016, Operators encapsulate the idea of moving operational concerns into software. Instead of relying on runbooks or other documentation, Operators execute actions automatically. For example, an Operator can deploy database instances, upgrade database versions, and perform backups. And because these are automated systems, they can be tested, and they can react faster than a human engineer.

Operators move tool configuration into the Kubernetes API by extending it with custom resource definitions. This means that Kubernetes itself becomes the "single pane of glass". It allows DevOps practitioners to leverage the rich ecosystem of tools built around Kubernetes API resources to manage and monitor deployed applications:

This approach also ensures uniformity between production, test, and development environments. If each one is a Kubernetes cluster, you can use an Operator to deploy the same configuration to all of them.

There are many reasons to create an Operator from scratch. Usually it's either a development team creating a first-party Operator for its own product, or a DevOps team looking to automate the management of third-party software. Either way, the development process begins with identifying the use cases the Operator needs to handle.

The most basic Operators handle deployment. Creating a database in response to an API resource can be as simple as kubectl apply. However, this is little better than what built-in Kubernetes resources such as StatefulSets and Deployments already provide. It's with more complex operations that Operators start to add value. What if you want to scale your database?

With a StatefulSet, you can run kubectl scale statefulset my-db --replicas 3 and get three instances. But what if those instances require different configurations? Should one instance be designated as the primary and the others as replicas? What if specific setup steps are required? This is where an Operator can configure these settings with an understanding of the particular application.
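To make the primary/replica question concrete, here is a minimal sketch of how an Operator for a hypothetical primary/replica database might derive each instance's configuration from its StatefulSet-style ordinal. The InstanceConfig type, the configsFor function, and the "ordinal 0 is the primary" rule are illustrative assumptions, not taken from any real Operator (etcd itself elects a leader via Raft rather than using a fixed primary).

```go
package main

import "fmt"

// InstanceConfig is a hypothetical per-instance configuration for a
// primary/replica database; the field names are illustrative only.
type InstanceConfig struct {
	Name    string
	Role    string // "primary" or "replica"
	Follows string // instance a replica replicates from; empty for the primary
}

// configsFor derives one configuration per instance from its ordinal:
// ordinal 0 becomes the primary and every other ordinal replicates from it.
func configsFor(dbName string, replicas int) []InstanceConfig {
	primary := fmt.Sprintf("%s-0", dbName)
	configs := make([]InstanceConfig, 0, replicas)
	for i := 0; i < replicas; i++ {
		cfg := InstanceConfig{Name: fmt.Sprintf("%s-%d", dbName, i), Role: "replica", Follows: primary}
		if i == 0 {
			cfg.Role, cfg.Follows = "primary", ""
		}
		configs = append(configs, cfg)
	}
	return configs
}

func main() {
	// The equivalent of scaling my-db to three replicas, but with per-instance roles.
	for _, c := range configsFor("my-db", 3) {
		fmt.Printf("%s role=%s follows=%q\n", c.Name, c.Role, c.Follows)
	}
}
```

A real Operator would then render each configuration into whatever form the database expects, and would also need a plan for promoting a replica if the primary is lost.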

More advanced Operators can handle features such as automatic scaling in response to load, backups and restores, integration with metrics systems like Prometheus, and even failure detection and automatic tuning based on usage patterns. Any operation that has a traditional runbook can instead be automated, tested, and relied on to respond automatically.

Managed systems don't have to run inside Kubernetes to benefit from Operators. For example, major cloud providers such as Amazon Web Services, Microsoft Azure, and Google Cloud offer Kubernetes Operators for managing other cloud resources, such as object storage. This lets users configure cloud resources in the same way they configure Kubernetes applications. Operations teams can take the same approach for any other resource, using an Operator to manage anything from third-party software services (via their APIs) to hardware.

This article focuses on the etcd-cluster-operator, an Operator that I contributed to along with a number of colleagues, which manages etcd within Kubernetes. This article is not intended as an introduction to that Operator or to etcd itself, so I won't go into detail beyond what you need to understand what the Operator is doing.

In short, etcd is a distributed key-value data store. It can only be managed reliably if the following conditions are met:

Additionally:

As you can see, managing etcd involves more than a Kubernetes StatefulSet can usually handle, so we rely on an Operator. I won't go into detail about the exact mechanisms the etcd-cluster-operator uses to solve these problems, but I will refer to it as an example throughout the rest of this article.

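An Operator consists of two things: one or more custom resource definitions (CRDs) that describe the resources it manages, and a controller, the software that acts on those resources.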

Operators are typically containerized and deployed into the same Kubernetes cluster whose services they manage, usually as a simple Deployment. In theory, the Operator software itself can run anywhere, as long as it can communicate with the cluster's Kubernetes API. In practice, it's usually easier to run the Operator in the cluster it manages, typically in a dedicated Namespace to separate it from other resources.

If you are running the Operator using this method, you will need a few more things:

We'll talk more about the permission model and webhooks later.

The first decision is language and ecosystem. In theory, almost any language that can make HTTP calls can be used to write an Operator. Assuming you deploy it to the same cluster as the resources it manages, it needs to run in a container built for an architecture the cluster supports. Typically this is linux/x86_64, which is what the etcd-cluster-operator targets, but nothing prevents you from building Operators for arm64 or other architectures, or as Windows containers.

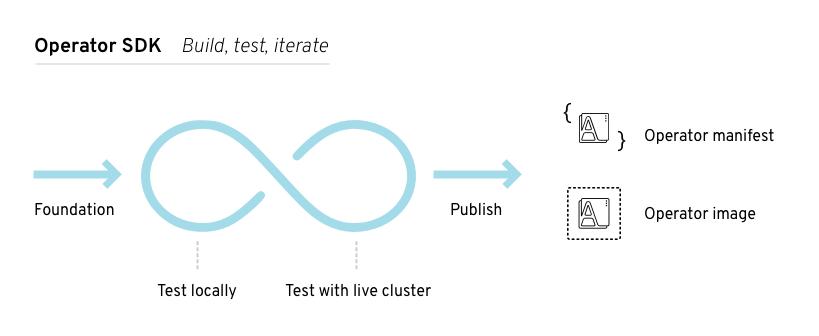

The Go language is generally considered to have the most mature tooling. controller-runtime, a Kubernetes project library for building controllers on the same client-go machinery that the core Kubernetes controllers use, is available as a standalone library. Additionally, projects such as Kubebuilder and the Operator SDK build on top of controller-runtime and aim to provide a simpler development experience.

There are general-purpose and specialized tools and projects for connecting to the Kubernetes API and building Operators in languages other than Go, such as Java, Rust, and Python. These projects have varying levels of maturity and support.

Another option is to interact directly with the Kubernetes API over HTTP. This requires the most manual effort, but allows your team to use the language they are most comfortable with.

Ultimately, the choice is up to the team that builds and maintains the Operator. If your team is already familiar with Go, the wealth of Go tooling makes the choice clear. If not, you need to weigh a less mature ecosystem in a language your team already knows against the learning curve and ongoing training needed to adopt Go and its more mature tooling. It's a trade-off that depends on which ecosystem you're already in.

For the etcd-cluster-operator, the team was already familiar with Go, so it was an obvious choice for us. I also chose Kubebuilder rather than the Operator SDK because I already knew it. The target platform was linux/x86_64, but Go can be cross-compiled for other platforms if needed.

For the etcd Operator, I created a custom resource definition named EtcdCluster. Once the CRD is installed, users can create EtcdCluster resources. The top level EtcdCluster resource states that we want an etcd cluster to exist and provides its configuration.

```yaml
apiVersion: etcd.improbable.io/v1alpha1
kind: EtcdCluster
metadata:
  name: my-first-etcd-cluster
spec:
  replicas: 3
  version: 3.2.28
```

The apiVersion string indicates the version of the API (v1alpha1 in this case), and kind declares that this is an EtcdCluster. As with any other type of resource, it has a metadata key that holds the name, namespace, labels, annotations, and other standard items. This lets EtcdCluster resources be treated like any other resource in Kubernetes. For example, you can use labels to identify the team responsible for each cluster, and then find those clusters with kubectl get etcdcluster -l team=foo, just as you would for standard resources.
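The kubectl get etcdcluster -l team=foo query relies on an equality-based label selector. A minimal sketch of the matching rule follows, using a simplified stand-in for the standard metadata; the ObjectMeta struct and matchesSelector function here are hypothetical, and real selectors also support operators such as !=, in, and notin.

```go
package main

import "fmt"

// ObjectMeta is a simplified, hypothetical stand-in for the standard
// Kubernetes metadata, keeping only the fields this sketch needs.
type ObjectMeta struct {
	Name      string
	Namespace string
	Labels    map[string]string
}

// matchesSelector reports whether every key=value pair in an equality-only
// selector is present in the object's labels, as `-l team=foo` requires.
func matchesSelector(meta ObjectMeta, selector map[string]string) bool {
	for k, v := range selector {
		if got, ok := meta.Labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	clusters := []ObjectMeta{
		{Name: "my-first-etcd-cluster", Namespace: "default", Labels: map[string]string{"team": "foo"}},
		{Name: "other-cluster", Namespace: "default", Labels: map[string]string{"team": "bar"}},
	}
	for _, c := range clusters {
		if matchesSelector(c, map[string]string{"team": "foo"}) {
			fmt.Println(c.Name) // only my-first-etcd-cluster matches
		}
	}
}
```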

The spec field is where the operational configuration for this etcd cluster lives. Many fields are supported, but only the most basic are shown here. The version field specifies the exact version of etcd that should be deployed, and the replicas field specifies how many instances should exist.

There is also a status field not shown in the example. This field is updated by the Operator to describe the current state of the cluster. Using the spec and status fields is standard in the Kubernetes API and integrates well with other resources and tools.

Because we use Kubebuilder, it can generate these custom resource definitions for us. With Kubebuilder, you write Go structs that define the spec and status fields:

```go
// EtcdPeerStorage and EtcdPodTemplateSpec are defined elsewhere in the operator's API package.
type EtcdClusterSpec struct {
	Version     string               `json:"version"`
	Replicas    *int32               `json:"replicas"`
	Storage     *EtcdPeerStorage     `json:"storage,omitempty"`
	PodTemplate *EtcdPodTemplateSpec `json:"podTemplate,omitempty"`
}
```

From this Go struct, and a similar struct for the status, Kubebuilder's tools generate the custom resource definition and take care of much of the hard work of handling the resource. That leaves you free to write just the code for the reconcile loop.

Other languages offer varying levels of support for this. If you're using a framework designed for Operators, this code may be generated for you; for example, the Rust library kube-derive works in a similar way. If your team uses the Kubernetes API directly, you'll need to write the code to parse the CRD and its data yourself.

Now that we can describe an etcd cluster, we can build the Operator that manages the resources that implement it. An Operator can do anything, but almost all Operators use the controller pattern.

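A controller is a simple software loop, often called the "reconcile loop", that performs the following logic:

1. Observe the desired state.
2. Observe the current state of the resources it manages.
3. Take action to bring the current state in line with the desired state.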

For Kubernetes Operators, the desired state is the spec field of the resource (EtcdCluster in this example), and the managed resources can be anything inside or outside the cluster. In this example, the Operator creates other Kubernetes resources such as ReplicaSets, PersistentVolumeClaims, and Services.
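The heart of the loop, comparing desired and observed state and deciding what to change, can be sketched as a pure function. This is a simplified illustration under stated assumptions: resources are identified only by name, and the Action type and reconcile function are hypothetical rather than part of any framework.

```go
package main

import "fmt"

// Action is a hypothetical change the controller decides to make.
type Action struct {
	Verb string // "create" or "delete"
	Name string
}

// reconcile compares desired and observed resource names and returns the
// actions that would bring the observed state in line with the desired state.
func reconcile(desired, observed []string) []Action {
	want := make(map[string]bool, len(desired))
	for _, n := range desired {
		want[n] = true
	}
	have := make(map[string]bool, len(observed))
	for _, n := range observed {
		have[n] = true
	}
	var actions []Action
	for _, n := range desired {
		if !have[n] {
			actions = append(actions, Action{Verb: "create", Name: n})
		}
	}
	for _, n := range observed {
		if !want[n] {
			actions = append(actions, Action{Verb: "delete", Name: n})
		}
	}
	return actions
}

func main() {
	// Desired: three peers from the spec. Observed: one matching peer plus a stale one.
	desired := []string{"my-first-etcd-cluster-0", "my-first-etcd-cluster-1", "my-first-etcd-cluster-2"}
	observed := []string{"my-first-etcd-cluster-0", "old-peer"}
	for _, a := range reconcile(desired, observed) {
		fmt.Println(a.Verb, a.Name)
	}
}
```

Keeping the decision logic separate from the API calls that carry it out makes it easy to unit test, and lets the controller choose to apply only the first action before exiting.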

For etcd in particular, the Operator also contacts the etcd processes directly to get their status from etcd's management API. This access outside Kubernetes is tricky, because a loss of network access doesn't necessarily mean the service is down. The Operator therefore can't treat an inability to connect to etcd as a signal that etcd isn't running (restarting a working etcd instance could make a network outage worse).

In general, when communicating with services other than the Kubernetes API, it is important to consider what availability and consistency guarantees they offer. With etcd, any answer you do get is strongly consistent, but other systems may not behave this way. It is important not to make an outage worse by taking the wrong action based on outdated information.

The simplest controller design is to rerun the reconcile loop periodically, say every 30 seconds. This works, but has many drawbacks. For example, you need to be able to detect whether the previous loop is still running, so that two loops never run at the same time. It also means a full scan of the relevant resources in the Kubernetes API every 30 seconds: for each EtcdCluster, the reconcile function must list the associated Pods and other resources. This approach places a heavy load on the Kubernetes API.

It also encourages a very "procedural" style. Each loop tries to do as much as possible, for example creating multiple resources at once, because the next reconcile may be a long time away. This can lead to complex situations where the Operator has to perform many checks to work out what to do, which makes bugs more likely.

To deal with this, controllers implement several features:

Combined, these features reduce both the cost of running a single loop and the time spent waiting between loops. As a result, each loop can do less work, and the reconcile logic can stay simpler.

Instead of scanning on a schedule, controllers can use the Kubernetes API's support for "watches". An API consumer can register interest in a resource or class of resources and be notified whenever a matching resource changes. This means an Operator can sit idle most of the time instead of repeatedly polling the API, and can respond to changes almost instantly. Frameworks for Operators typically handle registering and managing watches for you.

A consequence of this design is that the resources the Operator creates must be watched too. For example, if it creates Pods, it should watch those Pods, so that it is notified, woken up, and able to correct them if they are deleted or modified in a way that no longer matches the desired state.
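When an owned resource changes, the controller needs to reconcile its owner rather than the resource itself. A minimal sketch of that mapping follows; the WatchEvent type and requestFor function are hypothetical simplifications, and controller-runtime provides this behavior for you (for example through the controller builder's Owns method, which follows owner references).

```go
package main

import "fmt"

// WatchEvent is a hypothetical, simplified stand-in for a watch notification.
type WatchEvent struct {
	Type      string // "ADDED", "MODIFIED" or "DELETED"
	Kind      string
	Namespace string
	Name      string
	OwnerKind string // kind of the controlling owner reference, if any
	OwnerName string
}

// requestFor maps an event to the "namespace/name" key of the EtcdCluster to
// reconcile, following the owner reference for resources the Operator
// created. It returns "" for events the controller should ignore.
func requestFor(ev WatchEvent) string {
	switch {
	case ev.Kind == "EtcdCluster":
		return ev.Namespace + "/" + ev.Name
	case ev.OwnerKind == "EtcdCluster":
		return ev.Namespace + "/" + ev.OwnerName
	default:
		return ""
	}
}

func main() {
	events := make(chan WatchEvent, 2)
	events <- WatchEvent{Type: "DELETED", Kind: "Service", Namespace: "default", Name: "my-first-etcd-cluster",
		OwnerKind: "EtcdCluster", OwnerName: "my-first-etcd-cluster"}
	events <- WatchEvent{Type: "MODIFIED", Kind: "ConfigMap", Namespace: "default", Name: "unrelated"}
	close(events)
	// The controller sits idle on this channel until the watch delivers an event.
	for ev := range events {
		if key := requestFor(ev); key != "" {
			fmt.Println("reconcile", key)
		}
	}
}
```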

This lets us simplify the reconcile function one step further. For example, in response to an EtcdCluster, the Operator needs to create a Service and some EtcdPeer resources. Instead of creating them all at once, it creates the Service first and then exits. Because it watches the Services it owns, that creation immediately triggers another reconcile, at which point it can create a peer. Creating everything in one pass would save little anyway, because each new resource triggers a reconcile of its own.

This design keeps reconcile loops very simple. By performing only one action and then exiting, it removes the need for developers to reason about complex intermediate states.
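The "one action, then exit" style can be simulated without a cluster. In this sketch, the cluster type and reconcileOnce function are hypothetical, and the for loop in main stands in for the watch events that would re-trigger the reconcile after each change.

```go
package main

import "fmt"

// cluster is a toy model of the observed state for one hypothetical EtcdCluster.
type cluster struct {
	desiredPeers int
	hasService   bool
	peers        int
}

// reconcileOnce performs at most one action and reports whether it changed
// anything. In a real Operator, that change would fire a watch event, which
// in turn triggers the next reconcile.
func reconcileOnce(c *cluster) (string, bool) {
	switch {
	case !c.hasService:
		c.hasService = true
		return "created Service", true
	case c.peers < c.desiredPeers:
		c.peers++
		return fmt.Sprintf("created EtcdPeer %d", c.peers-1), true
	default:
		return "nothing to do", false
	}
}

func main() {
	c := &cluster{desiredPeers: 3}
	// Prints: created Service, created EtcdPeer 0, 1, 2, then nothing to do.
	for {
		msg, changed := reconcileOnce(c)
		fmt.Println(msg)
		if !changed {
			break
		}
	}
}
```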

The main drawback of relying on watches is that updates can be missed. Network interruptions, Operator Pod restarts, and other issues can all cause events to be lost. To address this, it's important to design Operators to be "level-based" rather than "edge-based".

These terms come from electronics, where they describe whether a circuit acts on a change in a signal's voltage (an edge) or on the voltage level itself. In our world, "edge-based" means "responding to an event", and "level-based" means "responding to the observed state".

For example, if a resource is deleted, an edge-based Operator might watch for the delete event and recreate the resource in response. But if it misses the delete event, it never tries to recreate the resource, or, even worse, it fails later because it assumes the resource still exists. A "level-based" approach instead treats any trigger simply as a signal that it should reconcile: it re-observes the current state and discards the details of the change that triggered it.
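The difference shows up when an event is missed. In this hypothetical sketch, the DELETED event for a Service was lost and the next trigger is an unrelated MODIFIED event; the function names are illustrative only.

```go
package main

import "fmt"

// edgeBased reacts only to the specific event it receives: it recreates the
// Service when it sees a DELETED event, so a missed event means no repair.
func edgeBased(eventType string, _ bool) string {
	if eventType == "DELETED" {
		return "recreate Service"
	}
	return "no action"
}

// levelBased ignores what triggered it and acts on the observed state alone.
func levelBased(_ string, serviceExists bool) string {
	if !serviceExists {
		return "recreate Service"
	}
	return "no action"
}

func main() {
	// The DELETED event was missed; the next trigger is an unrelated MODIFIED
	// event, and the Service is observed to be missing.
	fmt.Println("edge-based: ", edgeBased("MODIFIED", false))  // no action
	fmt.Println("level-based:", levelBased("MODIFIED", false)) // recreate Service
}
```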

Another major feature of many controllers is request caching. If a reconcile requests a list of Pods, and another reconcile is triggered two seconds later, the second request may be served from the cache. This reduces the load on the API server, but it creates additional considerations for developers.

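Cached results can be stale, and the Operator must be able to cope with that. In particular, a resource the Operator has just created may not appear in the cache straight away, so the following sequence can occur: a reconcile creates a Service and exits; the Service's creation triggers another reconcile, which reads from a cache that doesn't contain the Service yet; that reconcile therefore tries to create the Service again.

The Operator has accidentally tried to create a duplicate Service. The Kubernetes API handles this correctly and returns an error saying the resource already exists, so the Operator has to handle that case. In general, it's best to simply back off and reconcile again later. In Kubebuilder this happens by simply returning an error from the reconcile function, but other frameworks may differ. By the time the reconcile is rerun, the cache should have become consistent, and the next phase of reconciliation can proceed.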

A side effect of this is that all resources must be given deterministic names. Otherwise, the duplicate create could use a different name, the API server would accept it, and you would end up with a genuine duplicate.
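Deterministic naming is what turns a retried create into a harmless collision. The naming scheme in peerName and the createIfAbsent helper below are hypothetical; by contrast, a name with a random suffix (such as one produced by Kubernetes' metadata.generateName) would let the retry succeed and leave a real duplicate.

```go
package main

import "fmt"

// peerName derives a peer's name from its cluster and index. The scheme is
// hypothetical; what matters is that the same inputs always yield the same name.
func peerName(cluster string, index int) string {
	return fmt.Sprintf("%s-%d", cluster, index)
}

// createIfAbsent mimics the API server rejecting a second object with the same
// name in a namespace; it reports whether a new object was stored.
func createIfAbsent(store map[string]bool, name string) bool {
	if store[name] {
		return false
	}
	store[name] = true
	return true
}

func main() {
	store := map[string]bool{}
	// A reconcile working from a stale cache repeats the same create.
	fmt.Println(createIfAbsent(store, peerName("my-first-etcd-cluster", 0))) // true
	fmt.Println(createIfAbsent(store, peerName("my-first-etcd-cluster", 0))) // false: collision
	fmt.Println(len(store))                                                  // 1
}
```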

In some circumstances, many reconciles can be triggered at about the same time. For example, if the Operator watches a large number of Pods and many of them go down at once (due to a node failure, an administrator error, and so on), it receives a notification for each one. However, by the time the first reconcile actually runs and observes the cluster state, all of those Pods are already gone, so the remaining reconciles have nothing further to do.

If the number is small, this isn't a problem. But in a large cluster processing hundreds or thousands of updates at a time, repeating the same operation 100 times in a row can slow the reconcile loop to a crawl, or even crash the Operator as its queue fills up.

Because the reconcile function is "level-based", an optimization is possible. When queuing an update for a particular resource, if an update for that resource is already queued, the new one can simply be dropped. Combined with waiting briefly before reading from the queue, this effectively "batches" operations. So, depending on the exact behavior of the Operator and its queue configuration, if 200 Pods all die at the same time, it may perform only one reconcile.
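A minimal sketch of such a de-duplicating queue follows. It is a simplified, single-threaded illustration, similar in spirit to the "dirty set" in client-go's workqueue but without its concurrency handling, rate limiting, or delayed adds; the dedupQueue type and its methods are hypothetical.

```go
package main

import "fmt"

// dedupQueue is a FIFO that holds each key at most once while it is waiting.
type dedupQueue struct {
	order   []string
	pending map[string]bool
}

func newDedupQueue() *dedupQueue { return &dedupQueue{pending: map[string]bool{}} }

// Add enqueues key unless it is already waiting to be processed.
func (q *dedupQueue) Add(key string) {
	if q.pending[key] {
		return
	}
	q.pending[key] = true
	q.order = append(q.order, key)
}

// Get pops the oldest key; ok is false when the queue is empty.
func (q *dedupQueue) Get() (key string, ok bool) {
	if len(q.order) == 0 {
		return "", false
	}
	key, q.order = q.order[0], q.order[1:]
	delete(q.pending, key)
	return key, true
}

func (q *dedupQueue) Len() int { return len(q.order) }

func main() {
	q := newDedupQueue()
	// 200 Pods owned by the same EtcdCluster die at once. Each Pod event maps
	// to the owner's key, so only one reconcile request ends up queued.
	for i := 0; i < 200; i++ {
		q.Add("default/my-first-etcd-cluster")
	}
	fmt.Println(q.Len()) // 1
}
```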

Anything that accesses the Kubernetes API must provide credentials. Within the cluster, this is handled by the ServiceAccount the Pod runs as, and ClusterRole and ClusterRoleBinding resources associate permissions with that ServiceAccount. This matters especially for Operators. They need permission to get, list, and watch the resources they manage across the cluster, and they also need broad permissions over any resources they might create in response, such as Pods, StatefulSets, and Services.

Frameworks such as Kubebuilder and the Operator SDK can generate these permissions for you. For example, Kubebuilder takes a source-annotation approach and assigns permissions per controller. When multiple controllers are combined into a single deployed binary (as with the etcd-cluster-operator), their permissions are merged.

```go
// +kubebuilder:rbac:groups=etcd.improbable.io,resources=etcdpeers,verbs=get;list;watch
// +kubebuilder:rbac:groups=etcd.improbable.io,resources=etcdpeers/status,verbs=get;update;patch
// +kubebuilder:rbac:groups=apps,resources=replicasets,verbs=list;get;create;watch
// +kubebuilder:rbac:groups=core,resources=persistentvolumeclaims,verbs=list;get;create;watch;delete
```

These are the permissions for reconciling the EtcdPeer resource. You can see that the controller can get, list, and watch its own resources, and get, update, and patch their status subresource. This lets it update only the status, to surface information to other users. Finally, it has broad permissions over the resources it manages, so it can create them (and, for PersistentVolumeClaims, delete them) as needed.

While the custom resource definition itself provides some basic validation and defaulting, it's up to the Operator to perform more complex checks. The simplest approach is to do this when the resource is loaded into the Operator, such as when it is returned from a watch or just after it has been read manually. However, this means the defaults are never visible when viewing the resource through the Kubernetes API (for example, with kubectl), which can confuse administrators.

A better approach is to use validating and mutating admission webhooks, configured through ValidatingWebhookConfiguration and MutatingWebhookConfiguration resources. These tell Kubernetes to call a webhook when a resource is created, updated, or deleted, before the change is persisted.

For example, you can use a mutating webhook to apply defaults. Kubebuilder provides some additional configuration to create the MutatingWebhookConfiguration, and it takes care of serving the API endpoint. All we write is a Default method on the spec struct that fills in the defaults. Then, when the resource is created, the webhook is called to apply the defaults before the resource is persisted.

However, defaults should still also be applied when the Operator reads the resource. The Operator cannot assume that webhooks are enabled on the platform. Even if they are, they could be misconfigured, a network outage could cause the webhook to be skipped, or resources could have been created before the webhook was configured. All of this means that while webhooks provide a better user experience, the Operator code can't rely on them and must apply the defaults again itself.
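Because the same defaulting may run twice, once in the webhook and again when the Operator reads the resource, it should be idempotent. A minimal sketch under stated assumptions: the default of three replicas and the trimmed-down EtcdClusterSpec are hypothetical, not the etcd-cluster-operator's actual values.

```go
package main

import "fmt"

// defaultReplicas is a hypothetical default used only for this sketch.
const defaultReplicas int32 = 3

// EtcdClusterSpec is trimmed down to the fields this sketch needs.
type EtcdClusterSpec struct {
	Version  string
	Replicas *int32
}

// Default fills in unset fields only, so it is idempotent and safe to call
// from both the mutating webhook and the reconcile loop.
func (s *EtcdClusterSpec) Default() {
	if s.Replicas == nil {
		r := defaultReplicas
		s.Replicas = &r
	}
}

func main() {
	// A resource created before the webhook was configured: no defaults yet.
	spec := EtcdClusterSpec{Version: "3.2.28"}
	spec.Default()              // applied by the Operator on read
	spec.Default()              // calling it again changes nothing
	fmt.Println(*spec.Replicas) // 3
}
```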

While individual units of logic can be unit tested with the language's usual tools, integration testing poses particular problems. It's tempting to treat the API server as a simple database that can be mocked. However, the real API server performs a lot of validation and defaulting, which means behavior in tests can differ from behavior in a real cluster.

Roughly speaking, there are two main approaches: run just a real API server, or run a complete Kubernetes cluster.

In the first approach, the test harness downloads and runs the kube-apiserver and etcd binaries to create a working API server. (The use of etcd here has nothing to do with the fact that the example Operator manages etcd.) None of the other Kubernetes components run, so nothing creates Pods: you can create a ReplicaSet, but it won't actually do anything.

The second approach is much more comprehensive and uses a real Kubernetes cluster, in which Pods run and respond correctly. This kind of integration testing is much easier with kind. The project's name is short for "Kubernetes in Docker", and it lets you run a full Kubernetes cluster anywhere you can run a Docker container. It has an API server, can run Pods, and runs all the main Kubernetes components. As a result, tests using kind can run on a laptop or in CI and behave very close to a real Kubernetes cluster.

Many ideas were touched upon in this article, but the most important ones are:

These tools let you build Operators that simplify deployments and reduce the burden on your operations team, whether for your own applications or for third-party software.

About the author

James Laverack works as a Solutions Engineer at Jetstack, a UK-based Kubernetes professional services company. He has over 7 years of industry experience and spends most of his time helping companies on their cloud native journey. Laverack is also a Kubernetes contributor and has been on the Kubernetes release team since version 1.18.
