How to control crawl budget (Part 1): Basics of crawl budget and causes of problems

This week's Whiteboard Friday covers a concept for advanced SEOs: the crawl budget. Google has a finite amount of time to crawl your site, so it's a subject to watch out for if you have indexing issues -- especially on large or frequently updated sites.

Hello Moz fans. Today's theme is "Crawl Budget".

First of all, I should say that this topic is aimed at advanced SEOs, mostly those working on larger websites. Even if that doesn't describe you, I think there's a lot to learn in terms of SEO theory from the tactics and diagnostics that come up when thinking about crawl budget.

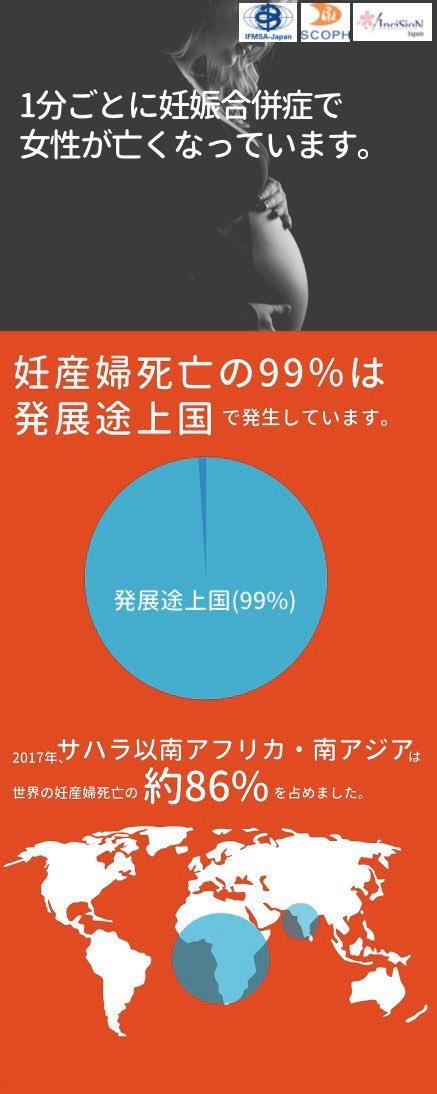

Google's documentation does, however, state when you should care about crawl budget:

Obviously this is a rather harsh or arbitrary threshold. In my opinion, even if the above conditions do not apply,

Both should be considered crawl budget issues.

What is a crawl budget?

What exactly is a "crawl budget"?

Crawl budget is "the amount of time Google is willing to spend crawling a site." Google may seem omnipotent. But in fact

So even Google needs some sort of prioritization to decide how much time or resources to allocate to crawling each website.

Currently, the priority is based on two things (at least that's what Google says):

Googlebot is kind of hungry for new and unseen URLs.

In this video, I won't talk about how to increase your crawl budget. Instead, I'll look at how to make the most of the crawl budget you already have. In any case, this is generally easier.

Causes of Crawl Budget Problems

In what cases does crawl budget actually become a problem?

The site feature that most often leads to crawl budget problems is, first and foremost, "faceted navigation".

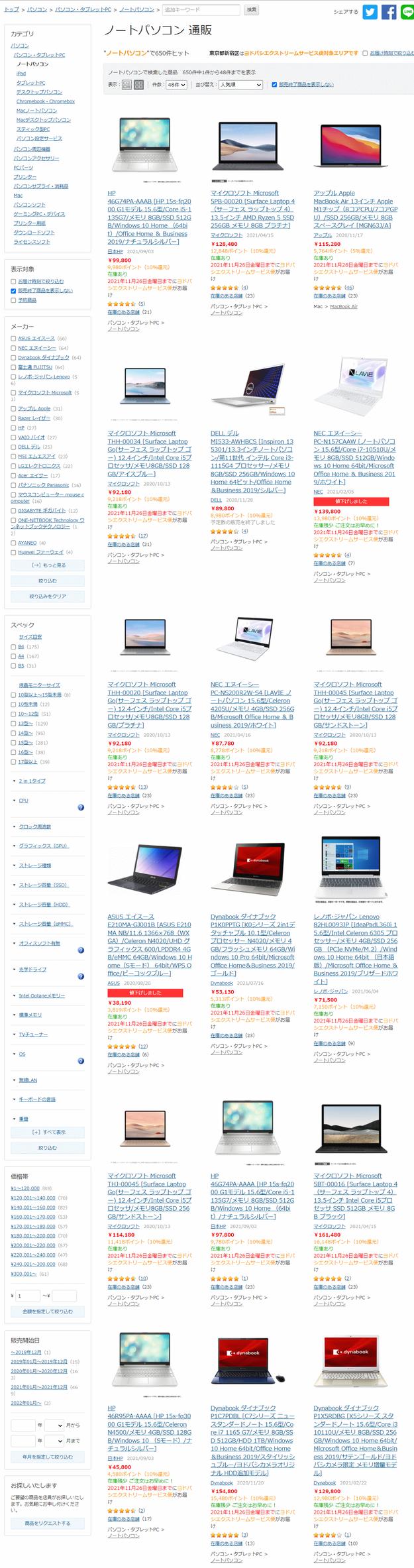

Think of an e-commerce site with a laptop page. Suppose you can filter by screen size. Even after narrowing down to "15 inch screen" and "16 GB memory", many combinations remain, so even though this is really just one page or one category (the laptop page here), the number of URLs can be huge.

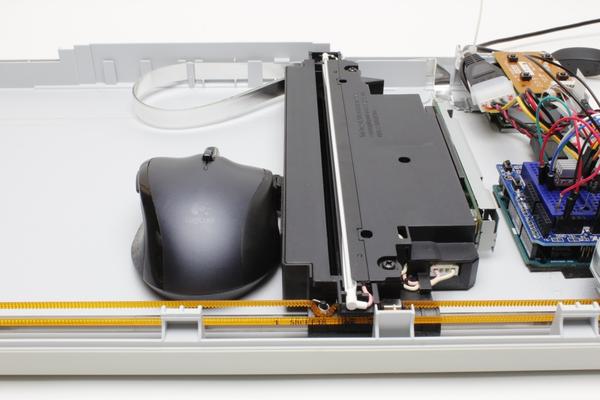

An example of faceted navigation (Yodobashi.com category page, sidebar on the left side of the screen), with an enlarged image showing that there are more facets.

In addition, changing the sort order creates different URLs. Even though it is exactly the same page, it may need to be crawled again because the URL is different. Besides sorting, there are countless other sources of URL variation, such as pagination. The result can be a huge number of URLs for a single category page.
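To get a feel for how quickly this multiplies, here is a minimal sketch that counts the URL variants of a single category page. The facet names, option counts, number of sort orders, and page count are all made-up numbers for illustration, not figures from any real site, and `count_url_variants` is a hypothetical helper.

```python
from math import prod

# Hypothetical facets for one laptop category page: name -> number of options.
facets = {"screen_size": 5, "memory": 4, "brand": 10, "price_range": 6}
sort_orders = 4  # hypothetical number of sort options
pages = 20       # hypothetical number of pagination pages per listing


def count_url_variants(facets, sort_orders, pages):
    """Count distinct URLs if each facet is either unset or set to one option."""
    # Each facet contributes (options + 1) choices: one per option, plus "not filtered".
    filter_combinations = prod(n + 1 for n in facets.values())
    return filter_combinations * sort_orders * pages


total = count_url_variants(facets, sort_orders, pages)
print(total)
```

With these made-up numbers, one category expands to 184,800 possible URLs. A real site would have fewer, since not every filter combination has 20 pages of results, but the multiplication is the point.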

There are a few other issues that come up often. The first is internal site search results pages. These can produce a large number of URLs, especially if there is pagination.

The same goes for listing pages (especially user-generated listings). Sites like job boards and eBay probably have a huge number of pages.

Allowing users to upload listings and content can lead to a bloated number of URLs over time.
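One way to see where this kind of bloat comes from is to group crawled URLs by path and count how many query-string variants each path has. The following is a minimal sketch, assuming you already have a list of URLs from a crawl export or server log. The URLs use a placeholder domain and `variants_per_path` is a hypothetical helper.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def variants_per_path(urls):
    """Map each URL path to the number of distinct query strings seen for it."""
    queries = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        queries[parts.path].add(parts.query)
    return {path: len(qs) for path, qs in queries.items()}


# Hypothetical crawled URLs (example.com is a placeholder domain).
crawled = [
    "https://example.com/laptops",
    "https://example.com/laptops?screen=15",
    "https://example.com/laptops?screen=15&sort=price",
    "https://example.com/laptops?screen=15&sort=price&page=2",
    "https://example.com/search?q=laptop",
    "https://example.com/search?q=laptop&page=2",
    "https://example.com/about",
]

counts = variants_per_path(crawled)
# List the paths with the most URL variants first.
for path, n in sorted(counts.items(), key=lambda item: -item[1]):
    print(path, n)
```

Paths with many variants relative to their real content, such as a filtered category or a search results page, are the likely candidates for crawl budget problems.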

How to Address the Crawl Budget Problem

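What methods can be used to address these issues and make the most of the crawl budget? We will discuss each of the following: robots.txt, link-level nofollow, "noindex, nofollow", "noindex, follow", canonical tags, and 301 redirects.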

As a baseline, consider how Googlebot treats a regular URL: it can be crawled, it can be indexed, and it passes PageRank. All three are fine.

If you link to a normal URL somewhere on your site, Google will follow the link and index the page. For example, there should be no problem if you link from the home page or the global navigation.

So the PageRank flowing through links to these pages is, in a sense, recycled. If you link to lots of different pages and different filters, some of it is lost to dilution with each link, but eventually it cycles back around. PageRank doesn't disappear into a black hole.

Now consider the opposite extreme, the most drastic solution to the crawl budget problem: the robots.txt file.

When a page is blocked (specified as Disallow) in robots.txt, the page cannot be crawled. Great, problem solved? Not quite, because robots.txt does not always have exactly the effect you intend.

Technically, sites and pages blocked by robots.txt can still be indexed. We've all seen it: sites or pages that appear in the search results without a meta description because they are blocked by robots.txt.

Example of a URL blocked by robots.txt displayed on a search results page

As I said, pages blocked by robots.txt can technically still be indexed, but in practice they won't rank for anything useful. Such pages also do not pass PageRank. Linking to them still sends PageRank into them, but because they are blocked by robots.txt, it goes no further.

PageRank leaks away, creating a black hole. In that sense, robots.txt is an easy-to-implement but fairly crude solution.
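To make the Disallow behavior concrete, here is a small sketch using Python's standard-library `urllib.robotparser` to check which URLs a given robots.txt blocks. The rules and URLs are hypothetical. Note that this parser only does simple prefix matching and is not Googlebot's own implementation, so treat it as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt blocking site search results and a filtered path.
robots_txt = """\
User-agent: *
Disallow: /search
Disallow: /laptops/filter/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Placeholder URLs: a normal category page, a filtered page, a search page.
urls = [
    "https://example.com/laptops",
    "https://example.com/laptops/filter/screen-15",
    "https://example.com/search?q=laptop",
]

for url in urls:
    # can_fetch() only answers "may this be crawled?"; it says nothing
    # about whether the URL can end up indexed.
    print(url, parser.can_fetch("Googlebot", url))
```

A check like this tells you only whether a URL may be crawled. As discussed above, a blocked URL can still show up in the index if other pages link to it.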

This article will be delivered in two parts.

In part two, we will look at how to use the remaining crawl budget measures: link-level nofollow, "noindex, nofollow", "noindex, follow", canonical tags, and 301 redirects.
