How to control crawl budget (Part 2): Dealing with the crawl budget problem in practice

This article is split into two parts.

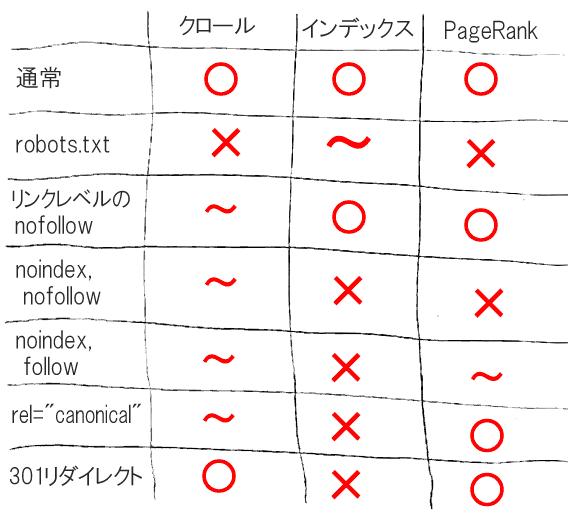

This is the second part. Continuing with crawl budget measures, let's look at how to use the remaining tactics: link-level nofollow, "noindex, nofollow", "noindex, follow", the URL normalization (canonical) tag, and the 301 redirect.

Finally, I will introduce a recent tactic that uses a sitemap.

→ First, read the first part

(Recap) How to deal with the crawl budget problem (continued)

Link-level nofollow means specifying the rel="nofollow" attribute on the a element of a link in the page's HTML.

What I mean is this: if the laptop category page links to facet pages, putting a rel="nofollow" attribute on those internal links has both advantages and disadvantages.

Where this works well is listings posted as UGC. Suppose you run a used car website with a huge number of individual used car listings, each like a product page. Depending on the size of the site, you may not want Google to waste time crawling every individual listing.

However, occasionally a celebrity uploads their own car, or a rare car is listed, and the page starts to attract links from the media. You don't want to block such a page in robots.txt, because those external links would be wasted. What you can do instead is nofollow the internal links to such pages. That way the page can still be crawled, but only if Google finds it some other way, such as through an external link.

So in terms of crawling, this is a halfway house. Technically, nofollow is now treated as a hint. In my experience, Google does not crawl pages that are linked only through internal nofollow links, but if it finds the page some other way, it will certainly crawl it. Generally speaking, this is an effective way to restrict crawl budget, or rather to use crawl budget more efficiently. The page can still be indexed.

The page can also still pass PageRank, which is the desired state. However, some PageRank is lost through the nofollow link. It still counts as a link, so the PageRank that would have flowed through it as a followed link is lost.
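As a rough illustration of link-level nofollow, the sketch below scans a page's HTML and reports which links carry rel="nofollow". The sample markup, the `/listing/` paths, and the `NofollowAudit` class name are assumptions made up for this example, not taken from any real site.

```python
from html.parser import HTMLParser


class NofollowAudit(HTMLParser):
    """Collect (href, is_nofollow) for every a element that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if href is None:
            return
        # rel is a space-separated token list, e.g. rel="nofollow ugc"
        rel_tokens = (attr.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


# Hypothetical category page of a used car site
SAMPLE_HTML = """
<ul>
  <li><a href="/used-cars/">All used cars</a></li>
  <li><a href="/listing/12345" rel="nofollow">Individual listing</a></li>
  <li><a href="/listing/67890" rel="nofollow ugc">Another listing</a></li>
</ul>
"""

audit = NofollowAudit()
audit.feed(SAMPLE_HTML)
for href, nofollow in audit.links:
    print(href, "nofollow" if nofollow else "follow")
```

A check like this only tells you what the markup says. Whether Google actually skips the nofollowed listings is something to confirm in the server logs.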

Specifying "noindex, nofollow" in the robots meta tag in the page's HTML head element is a familiar solution for e-commerce pages.

In this case, Google does crawl the page. However, when it reaches the page, it finds the noindex directive. Since crawling a noindex page makes little sense, the crawl frequency drops significantly in the long term. In other words, this case is also a halfway house for crawling.

Naturally, the page is not indexed, because it is noindex.

It does not pass PageRank onward. PageRank may still be passed to the page, but because nofollow is specified, the page does not pass the PageRank it receives on to other URLs. That is not great.

This drawback is the compromise you have to accept in order to save crawl budget.

So many people once thought that "noindex, follow" would be the solution that gives the best of both.

If you specify "noindex, follow" in the robots meta tag in the page's HTML head element, you get the same crawl benefits, so everyone is happy.

The duplicate pages you do not want indexed stay out of the index, and the PageRank problem is still solved.

However, a few years ago, Google said something like this:

Well, we hadn't realized it ourselves, but we actually crawl these pages less and less over time. So eventually we stop seeing the links on them, and in the end they are no longer crawl targets.

In other words, Google hinted that this no longer works as a way to keep passing PageRank, and that such pages eventually end up being treated the same as "noindex, nofollow". As a result, you cannot rely on this method very much.
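To make the two robots meta combinations concrete, here is a minimal sketch that reads the robots meta tag from a page and reports what it declares. The function names `robots_directives` and `summarize` are hypothetical helpers for this example, and the result reflects only what the tag states, not how Google ends up treating the page over time.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated and case-insensitive
            self.directives.update(
                token.strip().lower()
                for token in content.split(",")
                if token.strip()
            )


def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def summarize(html):
    found = robots_directives(html)
    return {
        # "none" is shorthand for "noindex, nofollow"
        "indexable": "noindex" not in found and "none" not in found,
        "links_followed": "nofollow" not in found and "none" not in found,
    }


print(summarize('<head><meta name="robots" content="noindex, nofollow"></head>'))
print(summarize('<head><meta name="robots" content="noindex, follow"></head>'))
```

Running this over a crawl of your own templates is a quick way to see which page types declare which combination.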

So the best option all around may be the URL normalization (canonical) tag. In the HTML head element of each page, a link element with rel="canonical" specifies the canonical URL.

With a canonical tag, the canonicalized page is still crawled a little less over time. Great. Indexing is consolidated on the canonical URL. Great. And PageRank is still passed.

It looks wonderful, and in many situations it seems perfect.

However, it only works well for pages that Google judges to be similar enough to be duplicates, so that it respects the canonical tag. If Google does not see the pages as duplicates, you may need to fall back to noindex.
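The canonical tag can be checked in the same way. The sketch below extracts the rel="canonical" URL from a page and resolves it against the page's own URL. The `example.com` URLs and the `canonical_url` helper are assumptions for illustration only.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Remember the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_tokens:
            self.canonical = attr.get("href")


def canonical_url(page_url, html):
    """Return the absolute canonical URL declared by the page, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return None
    return urljoin(page_url, parser.canonical)


# Hypothetical faceted URL that points at its category page
page = "https://example.com/laptops?sort=price&color=black"
html = '<head><link rel="canonical" href="/laptops"></head>'
target = canonical_url(page, html)
print(target)
print("canonicalized elsewhere:", target is not None and target != page)
```

This only shows what the page requests. As noted above, Google decides for itself whether the pages are similar enough to honor the tag.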

There are also URLs that seem to have no reason to exist at all. It is unclear how such odd combinations came about, but they appear to be pointless.

You stop linking to such a page. Even so, in case someone still finds the URL, a 301 redirect is a simple option that ends up working fairly well. In short, every request for the URL is forcibly forwarded to another URL.

Even when Google occasionally comes back to check, it does not have to look at the page, because it simply follows the 301. So for saving crawl budget, this should be even better than the canonical tag or noindex.

The indexing problem is resolved, and PageRank is passed. Of course there is a trade-off: users can no longer access the URL either, so you have to be comfortable with that.
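In practice a 301 is usually configured in the web server or CMS, but the behavior can be sketched as a tiny WSGI application. The `REDIRECTS` table and its paths are hypothetical examples, not URLs from the original article.

```python
# Hypothetical mapping from retired URL paths to their replacements
REDIRECTS = {
    "/laptops/black/15-inch/black": "/laptops/black/15-inch",
}


def app(environ, start_response):
    """Answer retired paths with a 301 and serve everything else normally."""
    path = environ.get("PATH_INFO", "")
    target = REDIRECTS.get(path)
    if target is not None:
        # No page body is needed: crawlers and browsers follow Location
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"page content"]
```

To try it locally, the app can be served with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` from the standard library. The point of the sketch is that the retired URL never renders a page, which is why it is cheap for a crawler to recheck.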

Practical points for crawl budget measures

With all the tactics laid out, how do you actually use them? What would I recommend to someone who wants to work on crawl budget in practice?

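Roughly, the following seven come to mind:

1. Improve page load speed
2. Analyze the server logs
3. Use 301 redirects for variations users do not need to see
4. Use rel="canonical" or noindex for variations users do need to see
5. Avoid linking to those variations in the first place
6. Use robots.txt and nofollow sparingly
7. Publish a sitemap limited to fresh URLs

Let me explain each one.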

One of the hardest measures to appreciate is improving page load speed. As I mentioned earlier, Google allocates a fixed amount of time and resources to crawling a given site. That means that if pages load faster, the number of pages that can be crawled in the same amount of time (with the same resources) simply increases.

Therefore, improving page load speed is a great approach to improving the crawl situation.
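The arithmetic behind this can be shown with a deliberately simplified model. It assumes the crawl allocation behaves like a fixed time budget and that pages are fetched one after another. Both the 600-second budget and the per-page times are made-up numbers, and Google's real scheduling is certainly more complex.

```python
def pages_crawled(budget_seconds, seconds_per_page):
    """Pages that fit in a fixed time budget under a serial-fetch assumption."""
    return int(budget_seconds // seconds_per_page)


# Hypothetical budget of 600 seconds of crawl time
print(pages_crawled(600, 2.0))  # slow pages: 300
print(pages_crawled(600, 0.5))  # fast pages: 1200
```

Under this model, cutting the time per page to a quarter lets four times as many pages fit into the same budget.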

Next is log analysis (an old-fashioned method). It is hard to work out which pages and which parameters on your site are actually consuming crawl budget. On large sites, analyzing the logs often produces surprising results, so I recommend it.

"Log analysis" here does not mean traffic analysis with Google Analytics. It means analyzing the web server log files in which Googlebot's requests are recorded.

Based on what the log files show, you then apply the measures introduced in this article.
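A first pass at this kind of log analysis might look like the sketch below. It assumes the server writes the common "combined" access log format and identifies Googlebot by user agent string alone. The sample lines and the `googlebot_hits` helper are invented for the example.

```python
import re
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

# Combined log format: ip, identity, user, [time], "request", status, size,
# "referer", "user agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<target>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count Googlebot requests per path and per query parameter name."""
    paths = Counter()
    params = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match is None or "Googlebot" not in match.group("agent"):
            continue
        parts = urlsplit(match.group("target"))
        paths[parts.path] += 1
        for name, _value in parse_qsl(parts.query, keep_blank_values=True):
            params[name] += 1
    return paths, params


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /laptops?color=black&sort=price HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:55:40 +0000] "GET /laptops?color=black HTTP/1.1" 200 5011 "-" "{GOOGLEBOT}"',
    '203.0.113.7 - - [10/Oct/2023:13:56:02 +0000] "GET /laptops HTTP/1.1" 200 4800 "-" "Mozilla/5.0"',
    f'66.249.66.1 - - [10/Oct/2023:13:57:10 +0000] "GET /listing/12345 HTTP/1.1" 200 3300 "-" "{GOOGLEBOT}"',
]

paths, params = googlebot_hits(SAMPLE_LOG)
print(paths.most_common())
print(params.most_common())
```

Because user agent strings can be spoofed, a production analysis would also want to verify that the requests really come from Google, for example by reverse DNS lookup. That step is left out here.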

A 301 redirect can be used for variations that users do not need to see. This is an effective measure.

A 301 redirect cannot be used for variations that users do need to see. For those, consider rel="canonical" or noindex instead.

However, it may be better to avoid linking to such pages in the first place, so that the PageRank passed to a page marked with rel="canonical" or noindex is not lost through dilution or a dead end.

The robots.txt and nofollow tactics should be used very sparingly.

The reason, as I touched on when introducing them, is that they create PageRank dead ends.

Finally, there is an interesting recent tip that I picked up from a blog post by Oliver H. G. Mason a while ago. According to him, if a sitemap lists only fresh, newly added URLs, Google will crawl that sitemap frequently (as already mentioned, Googlebot is hungry for fresh content).

With this tactic, crawl budget is directed to new URLs and everyone is happy. Googlebot is looking for fresh URLs anyway, and you probably want Googlebot to see them anyway. So setting up a sitemap dedicated to that purpose is an effective and easy tactic to put into practice.
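A sitemap limited to fresh URLs could be generated along these lines. The seven-day window, the `build_fresh_sitemap` name, and the example URLs are all assumptions for this sketch. The source does not state what cutoff or size the sitemap should have.

```python
from datetime import datetime, timedelta, timezone
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_fresh_sitemap(pages, now, max_age_days=7):
    """Build sitemap XML from (url, last_modified) pairs newer than the cutoff."""
    cutoff = now - timedelta(days=max_age_days)
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    # Newest first, so the freshest URLs lead the file
    for url, modified in sorted(pages, key=lambda p: p[1], reverse=True):
        if modified < cutoff:
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = modified.date().isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages with their last modification times
now = datetime(2024, 1, 10, tzinfo=timezone.utc)
pages = [
    ("https://example.com/listing/new", datetime(2024, 1, 9, tzinfo=timezone.utc)),
    ("https://example.com/listing/old", datetime(2023, 6, 1, tzinfo=timezone.utc)),
]
print(build_fresh_sitemap(pages, now))
```

The output would be saved as its own sitemap file, separate from the site's full sitemap, and regenerated as new URLs are published.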

◇◇◇

That's it for this time. Was this useful? If you have any new information or run into problems, please let me know on Twitter. I am very interested in how other people are tackling this topic.
